
-

How does CBB local file backups handle moved data?

The reason that we use the legacy format for file backups, both cloud and local, is that they are incremental forever, meaning once a file gets backed up, it never gets backed up again unless it is modified.

The new backup format requires periodic "True full" backups, meaning that even the unchanged files have to be backed up again. Now it is true that the synthetic backup process will shorten the time to complete a "true full" backup, but why keep two copies of files that have not changed?

As I have said before, unless you feel some compelling need to imitate tape backups with the GFS paradigm, the legacy format's incremental forever is the only way to go for file-level backups.

-

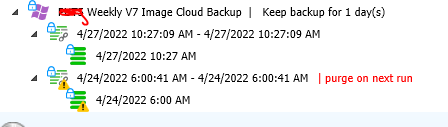

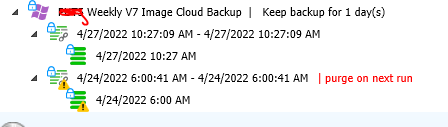

Retention Policy Problem with V7

I updated the server to 7.5 and made sure that my retention was set to 1 day (as it had been). I forced a synthetic full image backup.

Since it had been four days since the last full backup, I expected the four-day-old full to get purged, but that did not happen.

It is behaving the same as the prior version: it always keeps two generations, where I was expecting to now only have to keep one.

See attached file for a screenshot of the backup storage showing that both generations are still there.

Attachment: PUT5 (9K)

-

Retention Policy Problem with V7

David,

Any chance this issue was addressed in the latest release, 7.5? Really tired of spending $80+ per month to keep two full image/VHDx copies in the cloud when we really only need one.

-

CloudBerry Backup Questions

No, you cannot. We use the legacy format for file backups, and the new format for image and VHDx backups.

-

How does CBB local file backups handle moved data?

No need to do a repository sync. In either format, files would be backed up all over again, and the original location backups would be deleted from backup storage based on your retention setting for deleted files.

Synthetic fulls do not work for local storage, so move the files, run the backups, make sure you have a retention setting that purges deleted files, and you will be fine.

The problem with the new format is that you have to do periodic true full backups (where all files get backed up regardless of whether they have been modified), and you have to keep at least two generations. That is not the case for the legacy format, so we use legacy for local and cloud file backups. We use the new format for cloud image and VHDx backups, as Backblaze supports synthetic fulls, but we still need to keep two generations, which takes up twice the storage.

But it is worth it to get the backups completed in 75% less time thanks to the synthetic full capability.
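To put rough numbers on the storage trade-off described above, here is a minimal Python sketch. The function names, the 1 TB dataset, the 10 GB/day change rate and the 30-day window are all made-up assumptions for illustration. The model ignores compression, deduplication and how MSP360 actually lays out data in storage.

```python
def legacy_storage_tb(dataset_tb, daily_change_tb, retained_days):
    """Incremental forever: one copy of every file, plus the retained
    versions of files that were modified (simplified model)."""
    return dataset_tb + daily_change_tb * retained_days


def new_format_storage_tb(dataset_tb, daily_change_tb, days_per_generation,
                          generations=2):
    """New format: each generation holds a full copy plus its incrementals,
    and at least two generations are kept (per the post above)."""
    per_generation = dataset_tb + daily_change_tb * days_per_generation
    return per_generation * generations


# Hypothetical workload: 1 TB of files, 10 GB changing per day, 30-day window.
legacy = legacy_storage_tb(1.0, 0.01, 30)
new_format = new_format_storage_tb(1.0, 0.01, 30)

print(f"legacy format: {legacy:.2f} TB")
print(f"new format:    {new_format:.2f} TB")
print(f"ratio:         {new_format / legacy:.1f}x")
```

Under these assumptions the new format needs about twice the space, which is the "two copies of unchanged files" point in simplified form.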

Sorry if this is confusing.

-

Full backup to two storage accounts - how do they interact

No problem. Recommended, in fact. We do this all the time. Local storage does not support synthetic backups, but with locally connected storage it isn’t necessary.

-

Restore from cloud to Hyper-V VM on on-premises hardware?

So the short answer is that MSP360 does not have the ability to do what Recovery Console does (basically continuous replication via software).

You can certainly create a backup/restore sequence that would periodically back up and then restore VHDx files, but it might get tricky from a timing standpoint. Perhaps David G. can comment on how one might set that up.

For us, the daily local VHDx backups, combined with "every-four-hour" local file backups, provide an acceptable RPO/RTO for the majority of our SMB customers.

For our larger, more mission-critical clients, we use Hyper-V replication.

Typically we use what we call the "trickle down servernomics model".

When a server needs to be replaced, the old one becomes the Hyper-V replica, which provides recovery points every 10 minutes going 8 hours back.

It has served us well, and the cost is relatively low (given that the customer already owned the replica hardware). It does not perform as well, but in a failover situation, it works adequately.

-

Changing drive letter for backed up Windows directory hierarchy

See the knowledge base article in the link below. Yes, you can change the drive letter, and as long as the folder structure remains the same it will not back up everything again.

https://www.msp360.com/resources/blog/how-to-continue-backup-on-another-computer/amp/

-

MSP360 Restore Without Internet Connection

You need an internet connection to do a restore. If you have an internet connection you can do any type of restore, cloud or local. I do agree that if it is technically possible to permit a local restore from a USB/NAS device without an internet connection, it would be nice. Question: if a local restore starts, and then we lose the internet connection, will the restore finish?

-

Retention Policy Problem with V7

Thanks, David, for following up on this. FYI, I did discover that if I delete the oldest of the two generations and uncheck the "Enable Full Consistency Check" box, the synthetic full runs just fine, with only a warning that some data is missing from storage.

Not worth the time and effort to do that every week, for sure.

-

Retention Policy Problem with V7

Thanks. No need for incrementals, as these are disaster recovery images or VHDx files. A month-old or week-old image is fine to get someone back up and running, using these as a base plus daily file-based backups.

-

Retention Policy Problem with V7

Very simple. We used to do full backups monthly with 3-day retention. Once the newest full backup completed, the previous full got purged. Worked great.
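The expectation described here can be sketched in a few lines of Python. This is only a model of what the poster expected from retention (a full is purged once it is older than the retention period and a newer full exists). It is not MSP360's actual purge logic, and the function name and dates are hypothetical.

```python
from datetime import date, timedelta


def fulls_expected_to_purge(full_dates, today, retention_days):
    """Return the full backups the poster would expect to be purged:
    older than the retention window AND superseded by a newer full.
    The newest full is always kept."""
    if not full_dates:
        return []
    ordered = sorted(full_dates)
    newest = ordered[-1]
    cutoff = today - timedelta(days=retention_days)
    return [d for d in ordered if d != newest and d < cutoff]


# Hypothetical dates: last month's full plus the full that just completed,
# with a 3-day retention setting as in the post.
fulls = [date(2021, 3, 1), date(2021, 4, 1)]
print(fulls_expected_to_purge(fulls, today=date(2021, 4, 1), retention_days=3))
```

With only one full in storage the function returns an empty list, since the sole remaining generation should never be purged.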

-

Retention Policy Problem with V7

This is becoming a large problem. While there is absolutely no reason to keep two fulls of our image backups in cloud storage, there is no way to keep only the latest one. So our storage has doubled from 17TB to 35TB each month. That equates to an additional $85/month, which might not seem like a lot, but we are a small MSP and did not anticipate this added expense.
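A quick back-of-the-envelope check of those figures, as a small Python sketch. Only the 17TB, 35TB and $85 numbers come from the post. The per-TB rate is derived from them and is not a quoted vendor price, and the variable names are just for illustration.

```python
# Figures taken from the post above.
tb_one_generation = 17
tb_two_generations = 35
extra_monthly_cost = 85.0  # USD per month

# Derived values (assumption: cost scales linearly with stored TB).
extra_tb = tb_two_generations - tb_one_generation
implied_rate_per_tb = extra_monthly_cost / extra_tb
extra_yearly_cost = extra_monthly_cost * 12

print(f"Extra storage: {extra_tb} TB")
print(f"Implied rate: ${implied_rate_per_tb:.2f} per TB per month")
print(f"Extra cost per year: ${extra_yearly_cost:.0f}")
```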

I have not heard anything back about whether this will be remedied in a future release, so I am posting here to hopefully get an update.

-

Change Legacy File Backup to only purge

Whenever we replace a server, and choose to re-upload all the data, we put a note on the calendar for 90 days out to remove the old server backup data. We use CloudBerry Explorer to do the deletions.

If you are not replacing the machine, but simply want to stop doing backups, keeping the same server/PC, then simply identify and delete the folders that you no longer need (after your retention period expires). You can do this kind of deletion from the server console (once you turn on the Organization: Companies: Agent option that allows deletions from the console).

-

Backup Plans run at computer startup - even when option not selected

Yes, they did finally get back to me (thanks to you, I suspect).

I keep having to explain to clients why their backup runs in the morning and why it fails.

Thanks.

-

Incremental Forever and Synthetic Full

As an MSP customer, I whole-heartedly endorse what David G is saying.

The new format is great for cloud image and VHDx backups, as long as you are using a cloud vendor that supports synthetic fulls. We previously used Google Nearline storage for the image/VHDx backups, but they did not support synthetic fulls, so we moved them to Backblaze B2 (not Backblaze S3 compliant) and it has been absolutely fantastic!

Full Image/VHDx uploads to the cloud that used to take three days are done in 12 hours or less.

For standard file-based backups (cloud and local), we plan to keep using the legacy format so that there is no need to re-upload the entire dataset.

-

Searching for a file or files in backup history

Look. It does not, and has not, worked in the portal. It is important that MSP360 understands that when you have hundreds of clients you can’t log into their servers to look things up. The portal should be able to use a basic wildcard search function to find out what files got backed up and which files had a problem. Shouldn’t be rocket science, especially after seven+ years.

Steve Putnam


- © 2026 MSP360 Forum